TLDR: A team from Faculty and Kainos introduced the concept of using synthetic data for computer vision to help address a common remote sensing data problem encountered by the UK’s Dstl. The customer needed to know if they could train algorithms before collecting data from a sensor that isn’t yet in use. With the help of the Rendered.ai platform, the team demonstrated that synthetic data offers opportunities for experimentation and testing beyond just supplementing real datasets.

When Faculty, a London-based company with expertise developing Artificial Intelligence algorithms, and Kainos, a UK-headquartered IT provider, took on a project awarded by the UK’s Defence Science and Technology Laboratory (Dstl) that called for new applications to detect specific types of objects in satellite imagery, the team decided to explore some unusual approaches to meeting their customer’s need.

The Dstl project team, part of the UK MOD’s Defence AI Centre, wanted to understand the likely performance and capabilities of computer vision algorithms for future satellite deployments, which meant that data from the specific sensor was not yet available. On top of that, no current dataset exactly matched what the team needed to train on, so any real dataset acquired as a stand-in would need additional postprocessing to represent the different kind of sensor capture.

Case study: A team from Faculty and Kainos used Rendered.ai to introduce a novel training data type, synthetic data, to the UK’s Dstl for a study of capabilities around a future sensor type. (Actual examples of imagery produced for Dstl are not shown in this article.)

After some creative web searching, Francis Heritage, Business Development Manager at Faculty, came across Rendered.ai and the concept of physically accurate synthetic data for AI training. Rendered.ai offers a cloud-hosted platform for customers who want to generate simulated computer vision data with 100% accurate labels. Rendered.ai’s approach is to combine physically accurate lighting and sensor characteristics with 2D and 3D assets assembled in a virtual scene that simulates a sensor capture scenario. The platform allows customers to launch jobs that run sensor capture scenarios over and over with randomization to generate fully labeled datasets. It also turned out that Rendered.ai’s early production work, which established the proof points for building its platform, focused on object detection in synthetic satellite imagery.

Like most computer vision project teams, the Faculty and Kainos team first looked into acquiring an existing dataset that was similar to their study problem. Purchasing real satellite data would have meant acquiring a fixed number of images from a pre-existing dataset with labeled objects that were only somewhat similar to the objects in the Dstl study. A real dataset from one of the existing satellite imagery vendors would likely also be resold to other analytics providers and would probably not capture the objects that Dstl wanted to detect. In most cases, real sensor datasets also do not come with redistribution rights, so Faculty would have risked being unable to deliver the processed imagery alongside its analysis.

After engaging with Rendered.ai and viewing demos of synthetic satellite imagery channels (“channels” being the Rendered.ai term for synthetic data applications), the Faculty and Kainos team chose to purchase a term-based subscription to the cloud platform to fit their project timeline. Rendered.ai typically sells one-year subscriptions to its PaaS; however, the company was willing to work within timelines set by the government project. For this engagement, Faculty opted for a high-end subscription model in which the Rendered.ai team provides initial setup services and some ongoing support for the synthetic data channel that the customer is using.

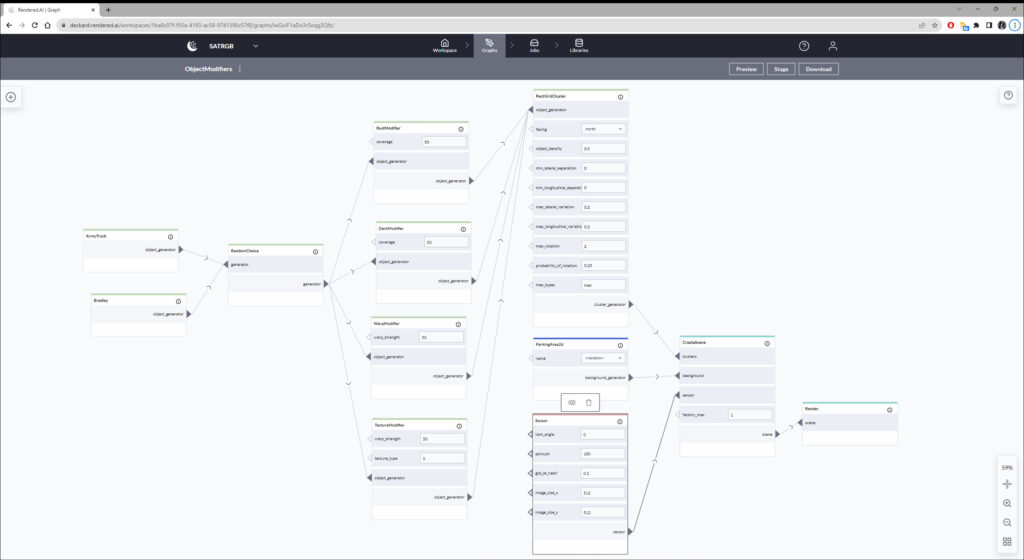

Rendered.ai provides multiple interfaces for generating synthetic data for computer vision, including a web UI, typically used by data scientists who configure synthetic data runs, and a Python SDK, most often used by customers who want to integrate continuous synthetic data generation into a data pipeline.

A graph in Rendered.ai showing modifiers to create random damage to vehicles. Graphs allow data scientists to quickly configure and spin up jobs to create entirely new, fully labeled sets of synthetic data for computer vision algorithm training.

Getting started with the Rendered.ai platform was quick, even compared with acquiring a large existing dataset. The subscription cost about one quarter as much as some of the datasets Francis had scoped out while shopping around for options, and by the end of the project his team had generated much more data than they could have acquired as real sensor data. The team also realized along the way that they could not easily have modified real sensor datasets to match the project-specified parameters. Ultimately, using Rendered.ai meant the team could tell Dstl that they had high confidence in their ability to train on data from the specified sensor for the required objects of interest.

Because Rendered.ai operates in the public cloud under standard terms of service, Faculty found it easier to propose a commercial PaaS than a bespoke, project-specific in-house synthetic data pipeline. Public cloud PaaS and SaaS offerings can be cheaper than government or private cloud setups. A further advantage of synthetic data is that there is nothing intrinsically secret or sensitive about fake datasets that would prevent them from being generated outside secure facilities.

The Faculty and Kainos team found some unexpected benefits of working with Rendered.ai. Most data science teams are handed datasets and a business problem, and then need to somehow manipulate those datasets to address some part of the required analysis. With a synthetic data platform, Faculty’s data scientists were able to imagine new scenarios and dataset parameters, reconfigure the synthetic data runs, and then generate more data. When the customer asked Faculty to come up with a mechanism to demonstrate that they could predict failure given a different distribution of events in the data, the team was able to control the distribution of objects in the simulated output. They were also able to create abnormal test cases guaranteed to produce failures, for example by coloring all of the objects of interest in absurd colors using simple modifiers in the Rendered.ai toolkit.
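To make the idea of controlling the object distribution and injecting deliberate failure cases more concrete, here is a minimal plain-Python sketch. It does not use the Rendered.ai SDK and is not how Rendered.ai graphs or modifiers are implemented; `SceneObject`, `sample_scene`, `build_dataset`, the class names, and the magenta “absurd color” are all hypothetical stand-ins for the scene parameters a synthetic data channel might expose. It only illustrates two points from the paragraph above: the class mix is a tunable input, and labels come directly from the scene description, so they are exact by construction.

```python
import random
from dataclasses import dataclass


# Hypothetical, highly simplified scene description. A real channel would also
# carry 3D assets, lighting, and sensor parameters.
@dataclass
class SceneObject:
    cls: str
    x: float
    y: float
    color: tuple


ABSURD_COLOR = (255, 0, 255)  # magenta: deliberately implausible for vehicles


def sample_scene(rng, class_weights, n_objects, absurd=False, size=1000.0):
    """Place n_objects at random positions, drawing classes from class_weights."""
    classes = list(class_weights)
    weights = [class_weights[c] for c in classes]
    scene = []
    for _ in range(n_objects):
        cls = rng.choices(classes, weights=weights, k=1)[0]
        if absurd:
            color = ABSURD_COLOR
        else:
            grey = rng.randint(40, 200)  # plausible muted paint tone
            color = (grey, grey, grey)
        scene.append(SceneObject(cls, rng.uniform(0, size), rng.uniform(0, size), color))
    return scene


def build_dataset(n_images, class_weights, objects_per_image=20,
                  anomaly_rate=0.0, seed=0):
    """Generate image records whose labels are read straight from the scene."""
    rng = random.Random(seed)  # seeded so a run can be reproduced exactly
    dataset = []
    for image_id in range(n_images):
        # A fraction of images become abnormal test cases with absurd colors.
        anomalous = rng.random() < anomaly_rate
        scene = sample_scene(rng, class_weights, objects_per_image, absurd=anomalous)
        labels = [(o.cls, o.x, o.y) for o in scene]
        dataset.append({"image_id": image_id, "anomalous": anomalous,
                        "scene": scene, "labels": labels})
    return dataset


if __name__ == "__main__":
    # Skew the class mix toward trucks and make roughly 10% of images anomalous.
    data = build_dataset(100, {"car": 0.3, "truck": 0.7}, anomaly_rate=0.1, seed=42)
    n_anomalous = sum(d["anomalous"] for d in data)
    print(f"{len(data)} images, {n_anomalous} anomalous test cases")
```

Changing `class_weights` shifts the distribution of objects a model sees, and raising `anomaly_rate` produces images that a detector trained only on realistic colors would be expected to fail on, which is the kind of controlled experiment that a fixed real dataset makes difficult.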

Describing his team’s experience with Rendered.ai, Francis said, “My two Ph.D. level data scientists were always in this thing, even though I didn’t want them generating images, I wanted them doing data science. They got it. They ended up generating their own images and owning the workflow themselves.”

Examples of synthetic computer vision training images with (bottom row) and without (top row) a color modifier on vehicles added to the scene. Simple effects like this are useful for experimentation with synthetic data. More complex effects such as vehicle damage, paint aging, rust, and other modifications, including vehicle placement variation, can be configured through the Rendered.ai web interface.

During the project, the Faculty and Kainos team also identified a few user experience issues, which they reported to the Rendered.ai development team. As a result, Rendered.ai now offers more interactive validation when configuring graphs, which describe visually how synthetic data will be generated. Based on feedback from Faculty and other customers, the web interface also now has better tools for inspecting datasets, intended to let data scientists more quickly check how their synthetic data is being generated and annotated.

The project was awarded directly to Kainos Group plc, with Faculty as a subcontractor to Kainos. At the end of the project, Faculty was able to deliver not only its analytical results but also the datasets generated with Rendered.ai, in case other teams in the Kainos or Dstl community could use them to explore algorithm development. Sharing datasets would have been less likely with an acquired real sensor dataset. In addition, it would be easy to set up a similar channel in Rendered.ai if needed on a future project.

Like most of the industry, the Rendered.ai and Faculty teams are both seeing a surge in interest in computer vision applications. With the proliferation of sensors above and on the Earth, more imagery is being collected in a day than human eyes alone can analyze. Emerging sensor modalities and platforms offer greater opportunities for detection but also incur greater costs for accurate labeling and innovation, costs that physically accurate synthetic imagery may help offset. New technologies such as Generative AI are introducing tools both for generating synthetic data and for attempting to defeat algorithmic detection. Both companies are actively researching these developments so they can advise customers who need to focus on critical geopolitical and environmental issues rather than on the latest advances in the fast-moving AI market.

Acknowledgements

We thank Francis Heritage and the Dstl, Faculty, and Kainos team for reviewing and allowing us to share this story.

About Faculty

Faculty, headquartered in London, transforms organisational performance through safe, impactful and human-led AI. We have over 10 years’ experience helping customers reap AI’s benefits whilst managing the risks. Founded in 2014 with a training programme to help academics become data scientists, we now provide over 300 global customers with software, bespoke AI consultancy, and an award-winning Fellowship programme. Our expert team includes leaders from across government, academia and global tech brands. We have raised over £40m from investors including The Apax Digital Fund, LocalGlobe, GMG Ventures LP, and Jaan Tallinn, one of Skype’s founding engineers. www.faculty.ai

About Kainos Group plc

Kainos Group plc is a UK-headquartered IT provider with expertise across three divisions – Digital Services, Workday Services, and Workday Products. Our Digital Services division develops and supports custom digital service platforms for public sector, commercial, and healthcare customers. Our solutions transform the delivery of these services, ensuring they are secure, accessible and cost-effective, and provide better outcomes for users. Our people are central to our success. We employ more than 2,900 people in 22 countries across Europe and the Americas. We are listed on the London Stock Exchange (LSE: KNOS) and you can discover more about us at www.kainos.com.