Rendered.ai and the Rochester Institute of Technology’s Digital Imaging and Remote Sensing (DIRS) Laboratory have partnered to provide DIRSIG’s simulation capability within the Rendered.ai Platform as a Service (PaaS). This partnership empowers customers to generate physically accurate synthetic data at the scales required to train artificial intelligence and machine learning algorithms. Rendered.ai has already deployed DIRSIG for notable customer applications. Together, the Rendered.ai and RIT team can support customers looking for synthetic data in domains ranging from remote sensing satellite imagery to drone and ground-based sensor data collection.

DIRSIG: A History of Physics-Based Excellence

For over three decades, an expert team at RIT’s DIRS Lab has developed and enhanced a unique simulator toolkit. Known as DIRSIG™, the toolkit enables users to configure and generate synthetic data, simulating physically accurate imagery and rich descriptive metadata as if they had been captured by a real, physical sensor.

DIRSIG evolved from a need among some of its original sponsors, primarily government agencies, for accurate examples of imagery before sensors were deployed in the field. DIRSIG came to be used for simulating sensor data captured over large agricultural and urban environments, experimenting with different types of sensor properties on spacecraft, and simulating data from conflict zones. Users also applied DIRSIG to a wide variety of research and training needs when real data acquisition would be impractical because of cost, sensitivity of data, or required acquisition time.

Over its first couple of decades of development, DIRSIG was adopted primarily by individuals or small teams of users in specialized organizations. Simulation and sensor experts typically deployed DIRSIG to generate image datasets for experimentation, validation of specific sensor configurations, and training analysts to recognize different features in imagery. During this time, sensors of all types proliferated in the broader market and more sophisticated machine learning techniques emerged for training algorithms to interpret spatial and/or spectral patterns in imagery.

Learn more about DIRSIG through this interactive guide.

Computer Vision Meets Simulation

Computer vision, the application of machine learning to imagery analysis, has significantly impacted many aspects of our daily lives. Computer vision enables faster grocery checkout, powers driver alertness systems, and allows us to unlock our phones with a glance. To train computer vision algorithms accurately, data scientists need thousands or even millions of instances of accurately labeled data that fits the training scenarios, sensor types, and business problems being addressed.

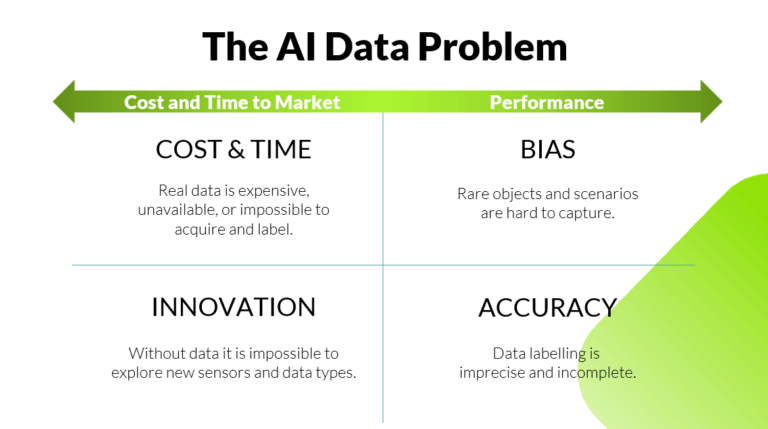

Contrary to many popular expectations, data is not free, nor is it always easy to collect. Imagery data can be expensive to acquire, can hold intrinsic bias because rare objects and events are hard to capture, and can raise privacy or security concerns. In some cases, it may not be possible to acquire data at all, such as when a new sensor is still in research and development.

Imagery simulation tools such as DIRSIG, NVIDIA Omniverse, and other applications make it possible to use 3D and physically accurate models to create artificial, or synthetic, data that emulates the properties of real data. Synthetic data can be generated on demand and is typically less expensive than real data collection. Synthetic data can also be modeled on researchers’ designs for future sensors, enabling simulation teams to generate and test data before new sensors are manufactured.

Generating Synthetic Data as a Productized Workflow

Machine learning requires large sets of data, which can be challenging to collect or create. Over the past decade, innovative teams have experimented with tools such as DIRSIG to simulate datasets for training and validating machine learning algorithms. Data creation efforts focused on single simulators typically end up as custom projects that require setup and configuration of hardware, software, 3D and 2D content, and physics-based models. For smaller companies and organizations, custom projects impose a burden of know-how and resources to set up simulation, computer vision, and the infrastructure needed to scale jobs for large datasets.

Conversely, some of the large tech companies investing in autonomy and deep learning recognized the need for synthetic data because of the vast quantities of data they needed. Many of the largest companies developing search and autonomy deployed sizeable teams to build customized synthetic data pipelines within their proprietary tech infrastructure. Compared to smaller organizations, large companies with existing investments in computer vision and scalable infrastructure have a lower barrier to entry into synthetic data generation. Adding simulation skills and technology is a more focused exercise for large organizations, which may also have more flexibility to invest in alternative data acquisition techniques to achieve their computer vision objectives.

For most of the market adopting computer vision, the most significant barrier to developing and deploying synthetic data generation is the additional technology and staffing required to implement, scale, and manage data generation. Platform as a Service (PaaS) applications abstract and simplify workflows on the cloud so that organizations can focus on their primary work and business problems.

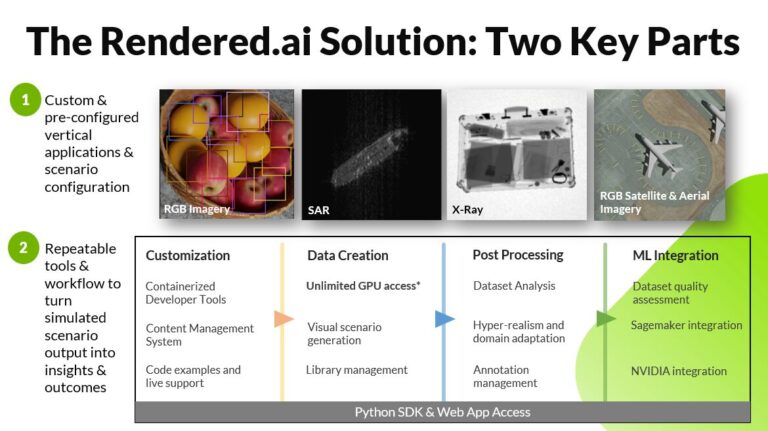

The Rendered.ai platform was created to help customers reduce the burden of technology and skill required to generate synthetic data in the cloud. As a PaaS, Rendered.ai streamlines and simplifies the process of setting up infrastructure for synthetic dataset generation. By implementing a workflow that enables iterative job configuration and execution on the cloud without the need to implement cloud infrastructure, Rendered.ai customers can focus on generating and comparing datasets to address their algorithm training and validation needs.

“The Rendered.ai PaaS unlocks the power of DIRSIG for users needing to generate data for algorithm training or sensor trade studies. The Rendered.ai and RIT team has demonstrated that it can rapidly develop and deploy custom-tailored data generation solutions that allow customers to produce data faster than if they tackled creating these complex workflows in-house.”

Scott Brown, Ph.D., Modeling and Simulation Lead, RIT

RIT and Rendered.ai

One of the many uses of synthetic data is for innovation in circumstances when customers may not be able to obtain data, such as before a sensor has been deployed in the field. At the start of their R&D process, the Planet Labs team experimented with DIRSIG to model their upcoming Tanager satellite’s hyperspectral data collection. The technical skills required to run and maintain DIRSIG meant that only a few Planet staff could create data. Rendered.ai was introduced to the team to solve this problem.

As a customer of Rendered.ai, Planet worked with the team to create and validate synthetic data to the exact specifications of the Tanager hyperspectral sensors so that physically accurate simulated data could be used for scientific and business development purposes. The Planet team generated hyperspectral imagery with examples of diverse environments and phenomena, such as methane plumes, for training algorithms. The Planet team was also able to effectively demonstrate the planned hyperspectral data acquisition and processing pipeline to customers before the satellite even launched.

Learn more about Planet Labs’ use of the Rendered.ai platform in this case study.

Through an agreement with the DIRS Lab, Rendered.ai developed a commercial capability to provide customers access to the DIRSIG simulator within the Rendered.ai platform. Offering DIRSIG as a packaged synthetic data channel on Rendered.ai reduces the training a customer would otherwise need in order to obtain DIRSIG directly from RIT. As a PaaS offering, using DIRSIG on the web eliminates most of the need for customers to build their own technical stack to support simulation. Customers also benefit from having experienced team members from both RIT and Rendered.ai work together to develop simulation capability that fits each customer’s needs.

Rendered.ai customers have used DIRSIG for various exploration and innovation use cases. While we often cannot disclose specific customer stories, applications of DIRSIG through Rendered.ai have included imagery and algorithm development for fire detection, drone-collected imagery for field asset inspection, and co-registered infrared and visible light sensor simulation in remote sensing imagery for military asset identification.

An Open Result

An exciting development in the Rendered.ai and DIRS Lab relationship is a new project to release an open-source Python library for scripting the setup of DIRSIG scenes. Rendered.ai developed the API with input from the RIT team to help customers create content more easily with familiar Python instructions. We’ll have more to share on this in the future.

What’s Next?

As the computer vision market matures, we hear more and more about the need for synthetic data. Notable technologists have acknowledged this need, including NVIDIA’s CEO, Jensen Huang, in his GTC 2024 keynote address this March.

More diverse solutions are coming to the market to help overcome data challenges. We at Rendered.ai and others are testing Generative AI, for example, to create synthetic data from existing datasets. Research has also improved the application of Large Language Models to detect and classify objects and imagery, such as by discovering new techniques to improve inference with LLMs in focused domains through secondary training or filtering. Even with these emerging tools, in cases such as when a novel object needs to be identified, or a technically sophisticated data type needs to be emulated, LLMs and Generative AI models aren’t sufficient because they rely on vast quantities of data, which are often not available for these applications.

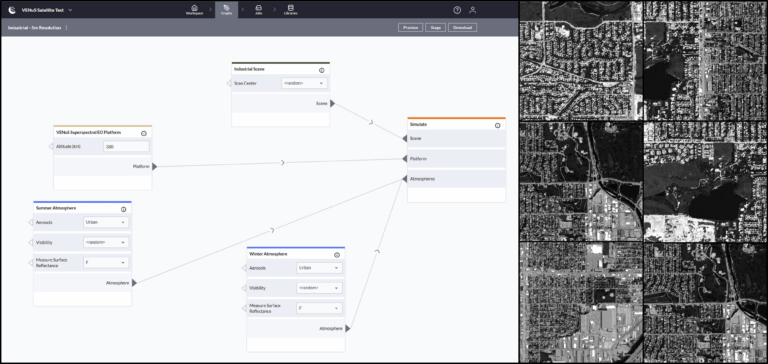

In addition to customer projects, Rendered.ai has applied DIRSIG to other problem sets, such as generating synthetic data as standardized ‘Analysis Ready Data’ for the Open Geospatial Consortium’s Testbed-19. The Rendered.ai team has also used our DIRSIG integration to emulate existing commercial sensors, such as the VENuS satellite, in just a matter of hours.

Explore and interact with the VENuS Sensor by requesting a free trial of the Rendered.ai platform!

Every year, we collect exponentially more imagery while constantly expanding the range of sensor technologies and types. Physics-based synthetic data is essential for training algorithms to process real sensor data. Rendered.ai and RIT’s DIRS Lab are committed to collaboratively supporting our customers through exploring new use cases and domains for the application of DIRSIG’s validated, physically accurate simulation powered by scalable synthetic data generation.